What is gRPC?

gRPC (gRPC Remote Procedure Call), originally developed by Google, is a high-performance, open-source framework for remote procedure calls between services in distributed systems. It allows clients to call server-side functions as if they were local procedures, eliminating much of the complexity associated with traditional REST-style request/response handling.

gRPC is designed for workloads that need low latency, horizontal scalability, and real-time data streaming.

The history of gRPC

The concept of Remote Procedure Call (RPC) dates back to the late 1970s and 1980s, with the goal of making remote service calls feel as natural as local function calls.

In 2015, Google open-sourced gRPC as the next-generation evolution of its internal Stubby system, which had powered inter-service communication across thousands of microservices in Google's global data centers since 2001. Today, gRPC is an incubating project under the Cloud Native Computing Foundation (CNCF).

gRPC modernizes RPC by standardizing service contracts with Protocol Buffers and carrying calls over HTTP/2, which yields compact payloads, multiplexed connections, and native support for streaming.

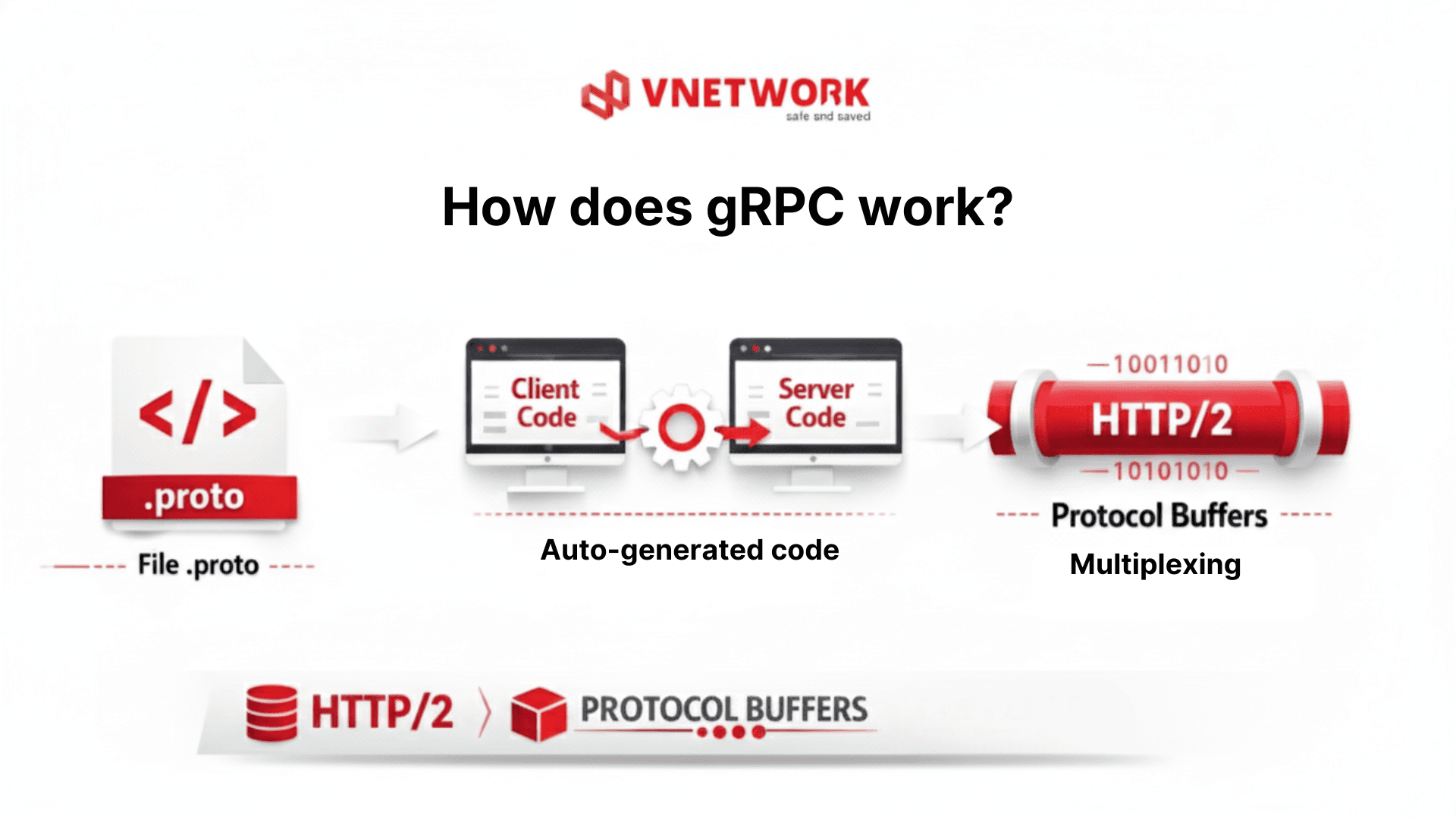

How gRPC works

gRPC leverages HTTP/2 as its transport protocol and Protocol Buffers (Protobuf) as its efficient binary serialization format.

The core workflow is straightforward:

- Define services and data structures in .proto files: Developers describe service methods, message types, and exchange formats in Protocol Buffers definition files (.proto). These files serve as the single source of truth and contract between clients and servers.

- Automatic code generation for multiple languages: The protoc compiler automatically generates client and server stub code in a wide range of programming languages from the same .proto file. This enables seamless interoperability across polyglot microservices without duplicating communication logic.

- Multiplexed communication over HTTP/2: Multiple independent streams run concurrently over a single persistent connection, drastically reducing latency, improving throughput, and ensuring stable performance even under heavy parallel workloads or real-time requirements.

This elegant process standardizes inter-service communication, reduces development complexity in distributed systems, and delivers high performance, scalability, and reliability for modern applications.
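The workflow above can be sketched without any gRPC library. The following is a minimal illustration, not generated code: `GreeterStub`, `InMemoryChannel`, and the `/demo.Greeter/SayHello` path are hypothetical names, and the payload is plain UTF-8 rather than Protobuf. What it does reflect from the gRPC wire format is the method path shape (`/<package>.<Service>/<Method>`) and the length-prefixed framing (a 1-byte compression flag, then a 4-byte big-endian length, then the message).

```python
import struct


def frame_message(payload: bytes, compressed: bool = False) -> bytes:
    """Wrap a serialized message in gRPC-style length-prefixed framing:
    1-byte compressed flag + 4-byte big-endian length + payload."""
    return struct.pack(">BI", int(compressed), len(payload)) + payload


def unframe_message(data: bytes) -> bytes:
    """Strip the 5-byte prefix and return the message bytes."""
    flag, length = struct.unpack(">BI", data[:5])
    if flag:
        raise ValueError("compressed messages are not handled in this sketch")
    return data[5:5 + length]


class InMemoryChannel:
    """Hypothetical stand-in for a network connection: maps a method path to a handler."""

    def __init__(self, handlers):
        self.handlers = handlers

    def unary_call(self, path: str, framed_request: bytes) -> bytes:
        handler = self.handlers[path]
        return frame_message(handler(unframe_message(framed_request)))


class GreeterStub:
    """Hand-written imitation of what a generated client stub does for the caller."""

    def __init__(self, channel):
        self.channel = channel

    def say_hello(self, name: str) -> str:
        framed = frame_message(name.encode("utf-8"))
        reply = self.channel.unary_call("/demo.Greeter/SayHello", framed)
        return unframe_message(reply).decode("utf-8")


def say_hello_handler(request: bytes) -> bytes:
    """Server-side implementation of the hypothetical SayHello method."""
    return b"Hello, " + request + b"!"


channel = InMemoryChannel({"/demo.Greeter/SayHello": say_hello_handler})
stub = GreeterStub(channel)
print(stub.say_hello("Ada"))  # the call site looks like a local function call
```

In a real project the stub and the serialization code would come from the code generator rather than being written by hand; the point here is only that the caller sees an ordinary method.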

What is Protocol Buffers (Protobuf)?

Protocol Buffers (Protobuf) is both the interface definition language and the compact binary serialization format at the heart of gRPC. Because data is encoded in binary rather than text, Protobuf payloads are significantly smaller, faster to transmit, and more efficient to parse than JSON or XML.
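To make the size difference concrete, here is a small sketch that hand-encodes one record in the Protobuf wire format and compares it with compact JSON. The message layout (`int32 id = 1; string name = 2;`) is a hypothetical example, and the encoder covers only the two wire types it needs; real code would use the generated classes instead.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a Protobuf base-128 varint."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_key(field_number: int, wire_type: int) -> bytes:
    """A field key is (field_number << 3) | wire_type, itself a varint."""
    return encode_varint((field_number << 3) | wire_type)


def encode_user(user_id: int, name: str) -> bytes:
    """Encode the hypothetical message `int32 id = 1; string name = 2;`."""
    name_bytes = name.encode("utf-8")
    return (
        encode_key(1, 0) + encode_varint(user_id)            # wire type 0: varint
        + encode_key(2, 2) + encode_varint(len(name_bytes))  # wire type 2: length-delimited
        + name_bytes
    )


binary = encode_user(150, "alice")
text = json.dumps({"id": 150, "name": "alice"}, separators=(",", ":")).encode("utf-8")
print(binary.hex(), len(binary), len(text))
```

For this record the binary form is 10 bytes and the compact JSON form is 25 bytes, mainly because field names are replaced by small numeric tags. The ratio depends heavily on the data, so treat this as an illustration rather than a benchmark.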

Protobuf also supports backward and forward compatibility, allowing schema evolution without breaking existing clients or servers. Its strong typing enforces strict data contracts, reducing runtime errors and improving overall system reliability.
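The compatibility behaviour can be sketched with a tiny hand-written decoder. This is an illustration under assumptions, not the real Protobuf runtime: the schema (`id = 1`, `name = 2`, and a later `email = 3`) is hypothetical, and only wire types 0 and 2 are handled. The decoder knows the older schema, skips any field number it does not recognize, and falls back to a default when a known field is absent.

```python
def read_varint(buf: bytes, pos: int):
    """Read a base-128 varint starting at pos; return (value, next_pos)."""
    result = shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def decode_user_v1(buf: bytes) -> dict:
    """Decoder built against the older hypothetical schema: id = 1, name = 2."""
    user = {"id": 0, "name": ""}  # defaults used when a field is absent
    pos = 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:  # varint
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:  # length-delimited
            length, pos = read_varint(buf, pos)
            value = buf[pos:pos + length]
            pos += length
        else:
            raise ValueError(f"wire type {wire_type} not handled in this sketch")
        if field_number == 1:
            user["id"] = value
        elif field_number == 2:
            user["name"] = value.decode("utf-8")
        # Any other field number is unknown to this version and is skipped.
    return user


# Written by a newer producer that added `string email = 3`.
v2_message = bytes.fromhex("0896011205616c696365" "1a056140622e63")
# Written by a producer that never set `name`.
partial_message = bytes.fromhex("089601")

print(decode_user_v1(v2_message))
print(decode_user_v1(partial_message))
```

The old decoder reads the newer message without failing because every field carries its own number and length, which is also why field numbers should not be reused once a schema is in production.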

The 4 gRPC service calling methods

gRPC defines four service calling methods for communication between client and server. These are the fundamental communication models that determine how data is sent and received in gRPC systems.

- Unary RPC (single call): With Unary RPC, the client sends a single request to the server and waits for a single response. This is the simplest one-to-one communication model in gRPC, operating similarly to traditional HTTP requests.

- Server Streaming RPC (server streams data): In Server Streaming RPC, the client sends one request to the server, but the server returns a continuous stream of responses. This method is suitable when the server needs to send large amounts of data over time, such as status updates or real-time data.

- Client Streaming RPC (client streams data): With Client Streaming RPC, the client sends a stream of requests to the server. After receiving and processing all data, the server returns a single response. This model is often used when the client needs to send multiple records or a large data set for the server to process as a batch.

- Bidirectional Streaming RPC (bidirectional streaming): Bidirectional Streaming RPC allows the client and server to send and receive data streams simultaneously, operating in parallel and independently. This enables real-time communication scenarios like chat, IoT systems, or applications requiring continuous data exchange.
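The four call shapes listed above can be modeled with plain Python functions and generators. This is a conceptual sketch only: the function names and toy payloads are hypothetical, nothing here touches the network, and the bidirectional case is simplified to one response per request even though the two directions are independent in real gRPC.

```python
from typing import Iterable, Iterator


def unary(request: int) -> int:
    """Unary: one request in, one response out."""
    return request * 2


def server_streaming(request: int) -> Iterator[int]:
    """Server streaming: one request in, a stream of responses out."""
    for i in range(request):
        yield i


def client_streaming(requests: Iterable[int]) -> int:
    """Client streaming: a stream of requests in, one aggregated response out."""
    return sum(requests)


def bidi_streaming(requests: Iterable[str]) -> Iterator[str]:
    """Bidirectional streaming: responses are produced while requests are still arriving."""
    for message in requests:
        yield message.upper()


print(unary(21))
print(list(server_streaming(3)))
print(client_streaming(iter([1, 2, 3])))
print(list(bidi_streaming(iter(["ping", "pong"]))))
```

The only thing that changes between the four shapes is whether each side of the signature is a single value or a stream.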

These models operate smoothly thanks to HTTP/2's multiplexing capability on a single connection, creating the foundation for gRPC to optimize performance when combined with CDN and modern API protection solutions.
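Multiplexing can be pictured as cutting each call into frames tagged with a stream ID and interleaving those frames on one connection. The sketch below is a simplified model, assuming a round-robin scheduler and a made-up 4-byte frame size; real HTTP/2 frames carry a binary header and the scheduling policy is up to the implementation. The payload strings are placeholders.

```python
from collections import deque


def split_into_frames(stream_id: int, payload: bytes, frame_size: int) -> list:
    """Cut one message into (stream_id, chunk) frames."""
    return [
        (stream_id, payload[i:i + frame_size])
        for i in range(0, len(payload), frame_size)
    ]


def multiplex(streams: dict, frame_size: int = 4) -> list:
    """Interleave frames from several streams onto one ordered connection (round-robin)."""
    queues = deque(
        deque(split_into_frames(sid, data, frame_size))
        for sid, data in streams.items()
        if data  # empty payloads produce no frames in this sketch
    )
    wire = []
    while queues:
        queue = queues.popleft()
        wire.append(queue.popleft())
        if queue:
            queues.append(queue)  # stream still has frames: go to the back of the line
    return wire


def demultiplex(wire: list) -> dict:
    """Reassemble each stream's payload from the frames tagged with its ID."""
    out = {}
    for stream_id, chunk in wire:
        out[stream_id] = out.get(stream_id, b"") + chunk
    return out


# Client-initiated HTTP/2 streams use odd-numbered IDs.
calls = {1: b"GetUser(42)", 3: b"ListOrders()", 5: b"Ping"}
wire = multiplex(calls)
print([stream_id for stream_id, _ in wire])
print(demultiplex(wire) == calls)
```

Because every frame names its stream, a short call such as the one on stream 5 finishes without waiting for the longer calls that were started before it.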

Comparing gRPC and REST

gRPC and REST are two popular approaches to building APIs and system communication. Each has its strengths and suits different use cases, from traditional web to modern distributed systems.

The comparison table below helps visualize the differences between gRPC and REST in terms of technology, performance, and application scope.

| No. | Criterion | REST | gRPC |
| --- | --- | --- | --- |
| 1 | Transport protocol | HTTP/1.1 (primarily) | HTTP/2 |
| 2 | Data format | JSON (text-based, human-readable) | Protocol Buffers (binary, compact) |
| 3 | Communication method | Based on URL + HTTP methods (GET, POST, PUT, DELETE) | Direct function calls (Remote Procedure Call) |
| 4 | Performance | Average, suitable for traditional web | High, low latency, bandwidth-optimized |
| 5 | Streaming capability | Limited, often requires additional solutions | Supports one-way and two-way streaming |
| 6 | Multiplexing | Not well supported | Built-in support via HTTP/2 |
| 7 | Automatic code generation | No unified standard | Generates client/server code automatically from .proto |
| 8 | Language support | Most platforms | Multi-language, optimized for distributed systems |
| 9 | Ease of access | Very easy to learn and debug | Requires higher technical knowledge |
| 10 | Suitable use cases | Public web APIs, third-party integrations | Microservices, internal APIs, mobile, IoT, real-time |

In summary, REST is suitable for public APIs, easy deployment, and accessibility, while gRPC excels in high performance, real-time communication, and scalability in microservices architectures. Choosing gRPC or REST should depend on system goals, technical requirements, and deployment scale.
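The difference in communication method (row 3 of the table) shows up in how the same operation is addressed on the wire. The sketch below builds both request shapes as plain dictionaries; the `/users/42` route and the `users.UserService/GetUser` method are hypothetical names chosen for illustration. The gRPC side follows the protocol's conventions: a POST to `/<package>.<Service>/<Method>` with `content-type: application/grpc` and the arguments in the body.

```python
def rest_request(user_id: int) -> dict:
    """REST style: the resource lives in the URL, the action in the HTTP verb."""
    return {
        "method": "GET",
        "path": f"/users/{user_id}",
        "headers": {"accept": "application/json"},
        "body": b"",
    }


def grpc_request(package: str, service: str, rpc: str, framed_message: bytes) -> dict:
    """gRPC style: always POST to /<package>.<Service>/<Method>; arguments travel in the body."""
    return {
        "method": "POST",
        "path": f"/{package}.{service}/{rpc}",
        "headers": {"content-type": "application/grpc", "te": "trailers"},
        "body": framed_message,
    }


# Body: 5-byte gRPC prefix (flag 0, length 2) followed by Protobuf field 1 = 42.
framed = b"\x00\x00\x00\x00\x02" + b"\x08\x2a"

print(rest_request(42)["method"], rest_request(42)["path"])
request = grpc_request("users", "UserService", "GetUser", framed)
print(request["method"], request["path"])
```

This is also why generic HTTP tooling that routes or caches by URL and verb sees less structure in gRPC traffic than in REST traffic.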

Key benefits of gRPC

gRPC offers many important benefits, making it a suitable choice for building high-performance APIs in modern distributed systems. Thanks to its schema-driven design, use of Protobuf and HTTP/2, gRPC not only optimizes speed but also significantly improves development and system operations experience.

- High performance and resource savings: gRPC uses Protocol Buffers (Protobuf) to serialize data in binary form, resulting in significantly smaller payloads than JSON. This allows faster data transmission, reduced latency, and bandwidth savings, especially effective for systems with high API traffic or real-time requirements.

- Multi-language support, easy system integration: gRPC allows automatic generation of client and server code for different programming languages from the same .proto file. This enables services using different technologies to communicate uniformly, very suitable for microservices architectures and polyglot systems.

- Strong typing - reduces errors from the development stage: Protobuf applies strict data typing, ensuring data structures are clearly controlled and validated. This allows many data errors to be detected at compile-time rather than runtime, increasing API reliability.

- Easy API extension without breaking existing systems: gRPC allows adding new fields or methods to services and messages while remaining compatible with old clients. This non-disruptive extensibility is particularly important in distributed systems where services and clients cannot be updated simultaneously.

- Automatic code generation, reduces effort and ensures consistency: The protoc compiler automatically generates client/server code based on .proto files, significantly reducing manual coding. This saves development time and ensures consistency between services, minimizing human errors.

- Support for interceptors and middleware for shared functions: gRPC supports interceptors and middleware, so cross-cutting functions such as authentication, logging, monitoring, and tracing can be integrated consistently. This keeps business logic clean while infrastructure concerns are applied uniformly across the system.

Thus, gRPC not only makes systems faster and more efficient but also provides a sustainable, scalable, and manageable API development foundation. These benefits make gRPC increasingly favored in microservices, cloud-native, and modern real-time application architectures.
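The interceptor idea from the list above can be sketched as function wrapping. This is a conceptual model, not the interceptor API of any gRPC library: the handler signature, the `Bearer demo-token` credential, and the `get_balance` method are all hypothetical. The status names `OK` and `UNAUTHENTICATED` are borrowed from gRPC's status codes.

```python
import time
from typing import Callable

Handler = Callable[[dict, dict], dict]  # (request, metadata) -> response


def auth_interceptor(next_handler: Handler) -> Handler:
    """Reject calls without the expected credential before they reach business logic."""
    def wrapped(request: dict, metadata: dict) -> dict:
        if metadata.get("authorization") != "Bearer demo-token":
            return {"status": "UNAUTHENTICATED"}
        return next_handler(request, metadata)
    return wrapped


def logging_interceptor(log: list) -> Callable[[Handler], Handler]:
    """Record the status and duration of every call that passes through."""
    def decorator(next_handler: Handler) -> Handler:
        def wrapped(request: dict, metadata: dict) -> dict:
            started = time.perf_counter()
            response = next_handler(request, metadata)
            log.append((response["status"], time.perf_counter() - started))
            return response
        return wrapped
    return decorator


def get_balance(request: dict, metadata: dict) -> dict:
    """Business logic only: no authentication or logging code in here."""
    return {"status": "OK", "balance": 100}


call_log = []
handler = logging_interceptor(call_log)(auth_interceptor(get_balance))

print(handler({}, {"authorization": "Bearer demo-token"}))
print(handler({}, {}))
print([status for status, _ in call_log])
```

The same chain can wrap every method of a service, which is what keeps authentication and logging out of the individual handlers.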

Common gRPC use cases

Thanks to its high performance, flexible scalability, and real-time communication support, gRPC is particularly suitable for modern systems with strict requirements on speed, stability, and parallel processing.

Below are typical scenarios where gRPC demonstrates clear strengths.

- Multi-language microservices: In microservices architectures, each service can be developed in a different language or on a different platform. gRPC generates client and server code from the same .proto file, so services written in different languages communicate through one uniform contract, which significantly reduces system integration complexity.

- Real-time applications and data streaming: gRPC supports server streaming, client streaming, and bidirectional streaming, allowing continuous real-time data transmission over a single connection. This is particularly suitable for chat applications, video, financial market data, or instant state synchronization, where low latency is critical.

- Large-scale IoT systems: IoT systems often connect thousands to millions of devices, continuously sending and receiving data. With compact Protobuf payloads and real-time processing capabilities, gRPC reduces bandwidth, optimizes network resources, and ensures fast, stable data collection and analysis.

- Fintech, gaming, and trading platforms: In fields like fintech, online gaming, or trading platforms, requirements for low latency, consistency, and scalability are extremely high. gRPC meets these well with superior performance, bidirectional streaming, and concurrent processing, enabling fast and accurate real-time system responses.

Thus, gRPC is not meant for every scenario, but it is a strong choice for systems that need high performance, continuous communication, and long-term scalability. Combined with CDN infrastructure and API security solutions, gRPC becomes a solid foundation for the next generation of applications.

Challenges in implementing gRPC

Alongside its superior performance and scalability benefits, gRPC also poses certain challenges in deployment and operations. Understanding these limitations helps enterprises develop appropriate strategies to leverage gRPC effectively and securely.

- Protobuf is more complex than JSON: Protocol Buffers provide high performance but require developers to clearly define data structures and service contracts in .proto files. JSON is flexible and human-readable, whereas designing Protobuf schemas takes more time and up-front design thinking, especially for teams new to it.

- Requires knowledge of HTTP/2, schema, code generation: To work effectively with gRPC, development teams need to understand concepts like Protobuf, HTTP/2, strong typing, and automatic code generation mechanisms simultaneously. This can create initial barriers, particularly for groups accustomed to traditional REST API development.

- Difficult to debug and analyze data: gRPC data is serialized in binary form, not human-readable like JSON or XML. Debugging, analyzing request/response, or tracking errors thus becomes more complex, requiring specialized tools and appropriate monitoring processes.

- Ecosystem and support tools less rich than REST: Although increasingly popular, the gRPC ecosystem is not as diverse as that of REST, which has existed longer and has a larger community. Some libraries, middleware, or specialized tools for gRPC may be harder to find, especially in specific scenarios.

These challenges are why CDN and WAAP (Web Application & API Protection) infrastructure needs full gRPC support. When these infrastructure layers understand and handle gRPC directly, enterprises can significantly reduce technical barriers, simplify operations, and ensure performance and security for gRPC systems in real deployments.

Why is CDN & WAAP support for gRPC an inevitable trend?

As microservices architectures, real-time APIs, and distributed applications become the standard, gRPC is establishing itself as the high-performance communication protocol for modern systems. In this context, CDN and WAAP supporting gRPC end-to-end is no longer just a technical upgrade but an essential step to help enterprises balance performance, stability, and security.

1. Superior performance for systems with high API call frequency

gRPC is built on HTTP/2 with multiplexing capabilities, allowing multiple requests and responses to run in parallel on a single connection. When a CDN supports gRPC end-to-end, API calls can be accelerated from the edge layer, reducing latency and improving response speed. This is especially important for microservices systems where services continuously call each other at high frequency.

2. High stability even during sudden traffic surges

When the CDN and WAAP layers understand and handle gRPC natively, common issues such as timeouts, connection resets, and protocol incompatibility errors become less frequent. This keeps the system stable during traffic spikes, avoids service disruptions, and improves the end-user experience.

3. Powerful streaming for real-time scenarios

One of gRPC's biggest advantages is continuous bidirectional streaming. When fully supported at the CDN & WAAP layer, real-time data streams are transmitted smoothly with minimal interruptions. This unlocks applications like real-time chat, IoT systems, fintech, online gaming, or instant data synchronization, where low latency and continuity are critical.

4. Bandwidth and operational cost savings

gRPC uses Protocol Buffers (Protobuf) - a much more compact binary format than JSON. When combined with CDN, transmitted data volume is significantly optimized, reducing bandwidth consumption and infrastructure costs. For large-scale systems or high API traffic, this is a clear long-term economic advantage.

5. Developer-friendly, quick deployment

CDN & WAAP support for gRPC does not require enterprises to refactor their backends or change existing API designs. Development teams can continue using familiar gRPC architectures while leveraging acceleration and protection from the front infrastructure layer. This shortens deployment time and reduces technical risks.

6. Good compatibility with gRPC-Web for modern web applications

Beyond backend-to-backend environments, gRPC-Web support allows modern web applications to connect directly to gRPC backends via CDN & WAAP. This expands gRPC usage to frontends while maintaining high performance and necessary security.

Thus, CDN and WAAP supporting gRPC not only makes systems faster and more stable but also creates a solid infrastructure foundation for modern, real-time applications with long-term scalability as the business grows.

VNCDN & VNIS - Optimizing performance and security for gRPC

VNCDN from VNETWORK provides a global CDN infrastructure with over 2,300 PoPs in 146 countries. It supports HTTP/2 and HTTP/3, enabling accelerated gRPC transmission with low latency and high scalability.

VNIS (VNIS WAAP – Web Application & API Protection) is an AI-built security platform that protects gRPC APIs against DDoS Layer 3/4 and Layer 7, malicious bots, and OWASP Top 10 risks. AI plays a central role in identifying, predicting, and blocking attacks right from the edge network infrastructure layer, before they reach the backend.

The combination of VNCDN + VNIS helps enterprises accelerate gRPC while providing comprehensive API security.

FAQ - Frequently Asked Questions about gRPC

1. What is gRPC?

gRPC is a high-performance framework for service-to-service communication that uses HTTP/2 and Protobuf to transmit data quickly, reliably, and with low bandwidth overhead in distributed systems.

2. How is gRPC different from REST?

REST typically uses JSON, which is easy to read but larger and slower to parse. gRPC uses binary Protobuf and direct function calls, which suits microservices and real-time workloads.

3. Does gRPC replace REST?

Not completely. REST is still suitable for traditional web. gRPC fits internal systems, real-time APIs, and cloud-native architectures.

4. Does CDN support gRPC?

Yes. Modern CDNs like VNCDN support HTTP/2, helping accelerate and stabilize global gRPC connections.

5. How do you secure gRPC?

Use a WAAP to protect gRPC APIs against DDoS, bots, and Layer 7 attacks. VNIS uses AI for 24/7 monitoring and risk prevention.